Introduction to Apache Hadoop

Problems with the Traditional Approach

The traditional approach does not properly handle the heterogeneity of data, i.e. structured, semi-structured, and unstructured data.

An RDBMS focuses mostly on structured data such as banking transactions and operational data. RDBMSs are highly consistent, mature systems supported by many companies.

An introduction to Apache Hadoop for big data

Apache Hadoop is an open-source software framework for the storage and large-scale processing of data sets on clusters (groups) of commodity hardware.

It specializes in semi-structured and unstructured data such as text, videos, audio, Facebook posts, and logs.

Hadoop applications run on large data sets distributed across clusters of commodity computers.

Commodity computers are cheap, widely available, and useful for achieving greater computational power at a low cost.

In Hadoop, data is stored in a distributed file system called the Hadoop Distributed File System (HDFS).

Its model is based on the 'data locality' concept, where computational logic is sent to the cluster nodes (servers) that contain the data.

This computational logic is a compiled version of a program written in a high-level language such as Java.

Hadoop was created by Doug Cutting and Mike Cafarella in 2005 and developed to support distribution for the Nutch search engine project.

Features Of 'Hadoop'

• Suitable for Big Data Analysis

Big Data tends to be distributed and unstructured in nature, so Hadoop uses clusters to analyze it.

The processing logic (not the actual data) flows to the computing nodes, so less network bandwidth is consumed.

This is called the data locality concept, and it helps increase the efficiency of Hadoop-based applications.
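To make data locality concrete, here is a minimal sketch in Python of a scheduler that prefers to run each task on a node that already stores the block it needs. It is an illustration only, not Hadoop's actual scheduler: the names `BLOCK_LOCATIONS` and `assign_tasks` and the node and block identifiers are all hypothetical.

```python
# Hypothetical placement map: block id -> nodes that hold a copy of the block.
BLOCK_LOCATIONS = {
    "block-0": ["node-a", "node-b"],
    "block-1": ["node-b", "node-c"],
    "block-2": ["node-c", "node-a"],
}


def assign_tasks(block_locations, free_nodes):
    """Assign one task per block, preferring a free node that stores the block.

    Falls back to any free node (a non-local read over the network) when no
    holder of the block is free. Returns {block_id: (node, is_local)}.
    """
    assignments = {}
    available = list(free_nodes)
    for block_id, holders in sorted(block_locations.items()):
        if not available:
            break  # no free nodes left; remaining blocks would have to wait
        local = [node for node in holders if node in available]
        node = local[0] if local else available[0]
        assignments[block_id] = (node, bool(local))
        available.remove(node)
    return assignments
```

When every node is free, each task in this sketch lands on a node that already holds its block, so only the program logic has to travel over the network.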

• Scalability

Hadoop clusters can be scaled out easily by adding cluster nodes as the volume of Big Data grows.

Scaling does not require modifications to application logic.

• Fault Tolerance

The Hadoop ecosystem replicates (copies) the input data onto other cluster nodes.

If a cluster node fails, data processing can still proceed by using data stored on another cluster node.
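The replication idea can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the round-robin placement below is not HDFS's real placement policy, and `place_replicas` and `readable_from` are hypothetical helper names. The replication factor of 3 matches the HDFS default.

```python
def place_replicas(block_ids, nodes, replication=3):
    """Place each block on `replication` distinct nodes (simple round-robin)."""
    if replication > len(nodes):
        raise ValueError("replication factor exceeds number of nodes")
    placement = {}
    for i, block_id in enumerate(block_ids):
        placement[block_id] = [
            nodes[(i + k) % len(nodes)] for k in range(replication)
        ]
    return placement


def readable_from(placement, failed_nodes):
    """For each block, return the first surviving node holding a copy, or None."""
    failed = set(failed_nodes)
    result = {}
    for block_id, holders in placement.items():
        alive = [node for node in holders if node not in failed]
        result[block_id] = alive[0] if alive else None
    return result
```

With four nodes and three copies per block, losing a single node still leaves every block readable from another node, which is the behaviour described above.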

How Hadoop Works

Hadoop can tie together many commodity computers, each with a single CPU, into a single functional distributed system. In practice, the clustered machines can read the data set in parallel and provide much higher throughput.

Such a cluster is also cheaper than one high-end server.

Hadoop runs code across a cluster of computers.

This process includes the following core tasks that Hadoop performs −

• Data is initially divided into directories and files.

• Files are divided into uniform-sized blocks of 128 MB or 64 MB (preferably 128 MB).

• These files are distributed across various cluster nodes for further processing.

• The Hadoop Distributed File System (HDFS), which sits on top of the local file system, supervises the processing.

• Blocks are replicated for handling hardware failure.

• Checking that the code was executed successfully.

• Performing the sort that takes place between the map and reduce stages.

• Sending the sorted data to a certain computer.

• Writing the debugging logs for each job.
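The block-splitting step in the list above comes down to simple arithmetic. The following Python sketch shows how a file of a given size would be cut into 128 MB blocks; `split_into_blocks` is a hypothetical helper for illustration, not an HDFS API.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # 128 MB, the preferred block size named above


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return (offset, length) pairs that cover a file of `file_size` bytes.

    Every block has `block_size` bytes except possibly the last one,
    which holds whatever remains.
    """
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size must be > 0")
    blocks = []
    offset = 0
    while offset < file_size:
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks
```

For example, a 300 MB file yields three blocks in this sketch: two full 128 MB blocks and a final 44 MB block.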

Advantages of Hadoop

• Ability to store a large amount of data.

• High flexibility.

• Cost effective.

• High computational power.

• Tasks are independent.

• Linear scaling.

• Quickly write and test distributed systems.

• Efficient: it automatically distributes the data and work across the machines and, in turn, utilizes the underlying parallelism of the CPU cores.

• Does not rely on hardware to provide fault tolerance and high availability (FTHA); rather, the Hadoop library itself is designed to detect and handle failures at the application layer.

• Servers can be added or removed from the cluster dynamically and Hadoop continues to operate without interruption.

• Open source and, being Java-based, compatible with all platforms.

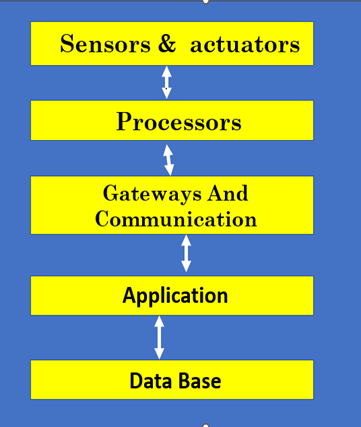

Apache Hadoop framework (Hadoop Architecture)

Hadoop has two major layers −

• Processing/Computation layer (MapReduce), and

• Storage layer (Hadoop Distributed File System).
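To show what the processing layer does, here is a small single-process sketch of the map, sort, and reduce stages, using word counting as the example. It only imitates the MapReduce flow in plain Python and does not use any Hadoop API; all function names are illustrative assumptions.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle_and_sort(pairs):
    """Group values by key and sort by key, as happens between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return sorted(groups.items())


def reduce_phase(key, values):
    """Reduce: add up all the counts collected for one word."""
    return key, sum(values)


def word_count(lines):
    """Run the three stages in order over a list of text lines."""
    mapped = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(key, values) for key, values in shuffle_and_sort(mapped))
```

In a real cluster the map calls would run in parallel on the nodes that hold the input blocks, and the grouped data would be sent across the network to the nodes that run the reduce step.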

Hadoop Ecosystem:

2. Hadoop Distributed File System (HDFS):

3. Hadoop YARN:

4. Hadoop MapReduce:

Disadvantages:
